What is sound

And its physical properties

Why does “the loudness war” exist? Why do musical instruments sound different from one another? What makes them sound lower or higher?

Daniel

All these questions require an understanding of the very basics of sound, so to answer them we have to go over the fundamentals of sound and its physical properties. This topic is extremely important for music producers of all kinds, so set aside at least 20 minutes to read it.

Sound

If we get rid of the complicated words that people use to describe things, sound is pretty simple. Sound is a vibration. That’s it.

The beauty is that air carries the vibration very accurately. If an object wiggles in a certain way, the air molecules around it wiggle in the same way. When that motion reaches our ears, it makes the eardrum vibrate in the same way too. Nerve signals are then sent to the brain, which interprets them as sound. So we hear the sound.

Sound has three physical properties: amplitude, frequency, and waveform. Let’s take a look at all of these.

Amplitude

Imagine a string. If we pluck it, it vibrates and we hear a sound. If we pluck it lightly, the wiggles will be weak; if we pluck it hard, the wiggles will be strong. This strength, or intensity, of the vibration is the amplitude.

By hearing we perceive the amplitude as loudness: we hear light wiggles as quieter sounds, and stronger as louder ones.

However, measuring loudness is kinda tricky. The human ear perceives loudness logarithmically, i.e. by the ratio between two signals rather than the difference between them. To express volume changes in a way that is close to how we hear, we use a unit called the decibel, written “dB”.

The decibel is a relative unit: it can only tell how loud or quiet a sound is relative to something. That “something” can be the sound itself before the change, or some reference level. Thus, we have two kinds of decibel measurements: the so-called “dB Gain” and “dB Level”. I know it may sound confusing, but these things are important to understand.

The redline: decibels explained

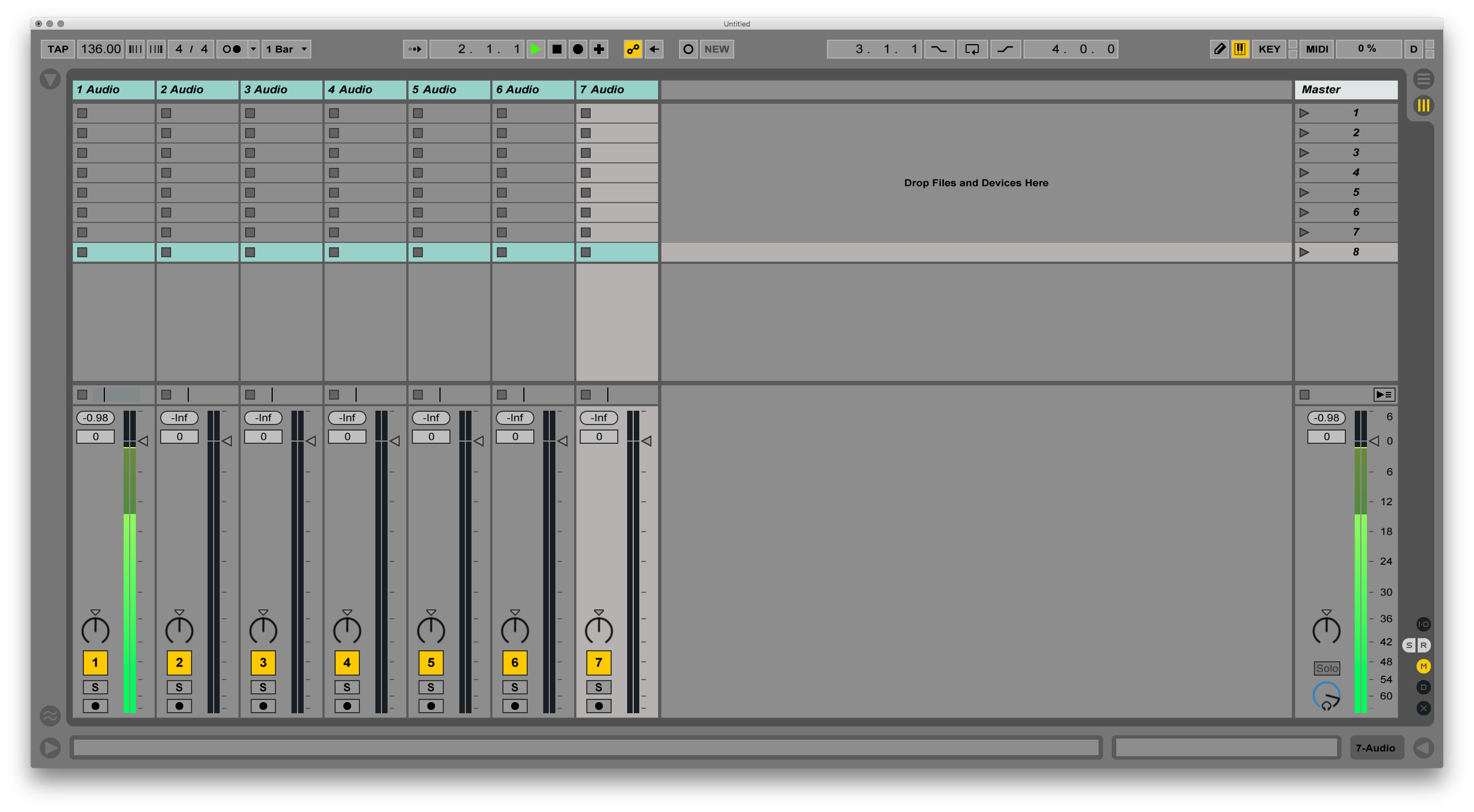

As an example of the first measurement type, dB Gain, let’s take a channel mixer in a DAW, which you might already be familiar with. If we pull the fader down to -6 dB, it simply means that the volume will be 6 dB lower than the originally recorded sound.
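To make the fader example concrete, here is a minimal sketch of how a gain in decibels relates to an amplitude multiplier. It assumes the common amplitude convention (20 times the base-10 logarithm of the ratio); the function names are made up for illustration and do not come from any particular DAW.

```python
import math


def ratio_to_db(ratio: float) -> float:
    """Convert an amplitude ratio (new / original) to a gain in dB."""
    return 20.0 * math.log10(ratio)


def db_to_ratio(gain_db: float) -> float:
    """Convert a gain in dB back to an amplitude multiplier."""
    return 10.0 ** (gain_db / 20.0)


# A fader at -6 dB scales the amplitude to roughly half.
print(round(db_to_ratio(-6.0), 3))  # 0.501
# Halving the amplitude is a change of about -6 dB.
print(round(ratio_to_db(0.5), 2))   # -6.02
```

Note that the result depends only on the ratio between the two signals, which is what makes the unit relative.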

A dB Level is measured relative to a fixed level, which is taken as the reference point for that particular scale.

One such reference level is the threshold of human hearing. To measure against it, we use decibels with the “SPL” suffix, which stands for sound pressure level. On this scale, zero decibels, or 0 dB SPL, is the quietest sound that human hearing can perceive; we cannot hear anything below it. To give an example, a whisper is about 30-40 dB SPL and a regular conversation is around 50-60 dB SPL. A level of 120 dB SPL is considered the threshold of pain, and exceeding it can cause hearing damage.
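As a rough illustration of the SPL scale, the sketch below converts a sound pressure in pascals to dB SPL. It assumes the standard reference pressure of 20 micropascals for 0 dB SPL in air; the function name and the sample pressures are chosen only for this example.

```python
import math

# Standard reference pressure for 0 dB SPL in air, in pascals (20 µPa).
P_REF_PA = 20e-6


def pressure_to_db_spl(pressure_pa: float) -> float:
    """Convert an RMS sound pressure in pascals to a level in dB SPL."""
    return 20.0 * math.log10(pressure_pa / P_REF_PA)


print(round(pressure_to_db_spl(20e-6), 1))  # the reference itself: 0 dB SPL
print(round(pressure_to_db_spl(1.0), 1))    # 1 Pa is about 94 dB SPL
print(round(pressure_to_db_spl(20.0), 1))   # 20 Pa is about 120 dB SPL
```

The pressure grows a million times between the threshold of hearing and the threshold of pain, while the decibel figure only goes from 0 to 120.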

Another kind of reference level is the operating level of digital systems. In digital audio, zero decibels is the upper limit. Anything above it is clipped, which causes noticeable distortion or deterioration of the sound. This is very important to understand. As you can see, unlike the scale based on the threshold of human hearing, in the digital world levels are counted down from the maximum value. The decibels used on this scale are called dB FS, where FS stands for “full scale”.
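Here is a small sketch of how a peak level in dB FS could be computed. It assumes samples are stored as floats where 1.0 is full scale, which is a common but not universal convention; `peak_dbfs` is a hypothetical helper, not a function from any audio library.

```python
import math


def peak_dbfs(samples: list[float], full_scale: float = 1.0) -> float:
    """Return the peak level of the samples in dB FS (0 dB FS = full scale)."""
    peak = max(abs(s) for s in samples)
    if peak == 0.0:
        return float("-inf")  # digital silence has no finite level
    return 20.0 * math.log10(peak / full_scale)


print(round(peak_dbfs([0.0, 0.25, -0.5]), 2))  # -6.02, half of full scale
print(peak_dbfs([0.3, -1.0, 0.7]))             # 0.0, exactly at full scale
```

Every level below full scale comes out negative, which is why meters in digital systems count down from zero.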

There are more types of decibels that are used to measure voltage and power, but I won’t talk about them in this instalment.

To summarize:

| Physical form | Scale | Meaning |
| --- | --- | --- |
| Sound wave | 0 dB SPL | Minimum level of human hearing |
| Digital audio | 0 dB FS | Maximum operating level of digital systems |

The point of using decibels is that a given change, for example 6 dB up or down, is heard as the same change in loudness, regardless of the sound source and the measurement scale. It is a universal unit, made to fit our non-linear perception of sound.

Frequency

The speed of an object’s vibrations is called frequency. It’s measured in hertz (written “Hz”) and is in fact very simple: one hertz is equal to one cycle of vibration per second. A “cycle” is one full movement of the vibration: back, forth, and back to the original position.

If an object wiggles fewer than 20 times per second, our ears will not hear a sound. The same happens if an object wiggles more than 20,000 times per second. Thus, the frequency range of human hearing is 20 Hz to 20,000 Hz. But that is theoretical. In practice, we feel the bottom of the range rather than hear it, while the upper limit for most adults is about 17-18 thousand hertz. Sound below 20 Hz is called infrasound (often “subsonic” in audio circles), and sound above 20 kHz is called ultrasound. Cats can hear much higher frequencies than this.

Heinrich Hertz

1857—1894

By hearing we perceive frequency as pitch. Slow wiggles we hear as low sounds, fast wiggles — as high sounds.

For instance, let’s take a standard-tuned 88-key piano. The note “A” of the fourth octave, A4, wiggles 440 times per second, i.e. at a frequency of 440 Hz. If we double this number, we get the sound an octave above, A5 (880 Hz). Conversely, if we halve this number, we get the sound an octave below, A3 (220 Hz). Here it is, the mathematical beauty of music!
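The octave relationship is easy to sketch in a few lines. The helper below is purely illustrative (its name is an assumption) and only covers whole or fractional octave shifts from a known frequency.

```python
def shift_octaves(freq_hz: float, octaves: float) -> float:
    """Move a frequency up (positive) or down (negative) by whole octaves."""
    return freq_hz * 2.0 ** octaves


a4 = 440.0
print(shift_octaves(a4, 1))   # 880.0, which is A5
print(shift_octaves(a4, -1))  # 220.0, which is A3
print(shift_octaves(a4, -2))  # 110.0, which is A2
```

Each octave is a doubling, so the distance in hertz between neighbouring octaves keeps growing as we go up the keyboard.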

Musical Scale Frequencies Chart

Usually, the frequency spectrum is divided into three major groups: lows, mids, and highs.

| Frequency, Hz | Group |
| --- | --- |
| 20–500 | Lows |
| 500–5000 | Mids |
| 5000–20000 | Highs |

This division is pretty rough, and each group can be divided into further sub-categories. But it is enough for a general understanding of the picture.
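As a small sketch, the three groups from the table can be expressed as a lookup. Which group the boundary values 500 Hz and 5000 Hz belong to is an assumption made here, since the table lists them in both neighbouring groups.

```python
def frequency_band(freq_hz: float) -> str:
    """Name the rough band a frequency falls into, following the table above.

    Assumption: boundary values go to the higher band (500 Hz counts as mids).
    """
    if freq_hz < 20 or freq_hz > 20000:
        return "outside hearing range"
    if freq_hz < 500:
        return "lows"
    if freq_hz < 5000:
        return "mids"
    return "highs"


print(frequency_band(110))    # lows
print(frequency_band(440))    # lows
print(frequency_band(1000))   # mids
print(frequency_band(12000))  # highs
```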

Because of our non-linear perception of sound, there is one more interesting thing: we hear different frequencies as differently loud, even when they are played at the same level. Moreover, this difference varies depending on the volume of the sound source.

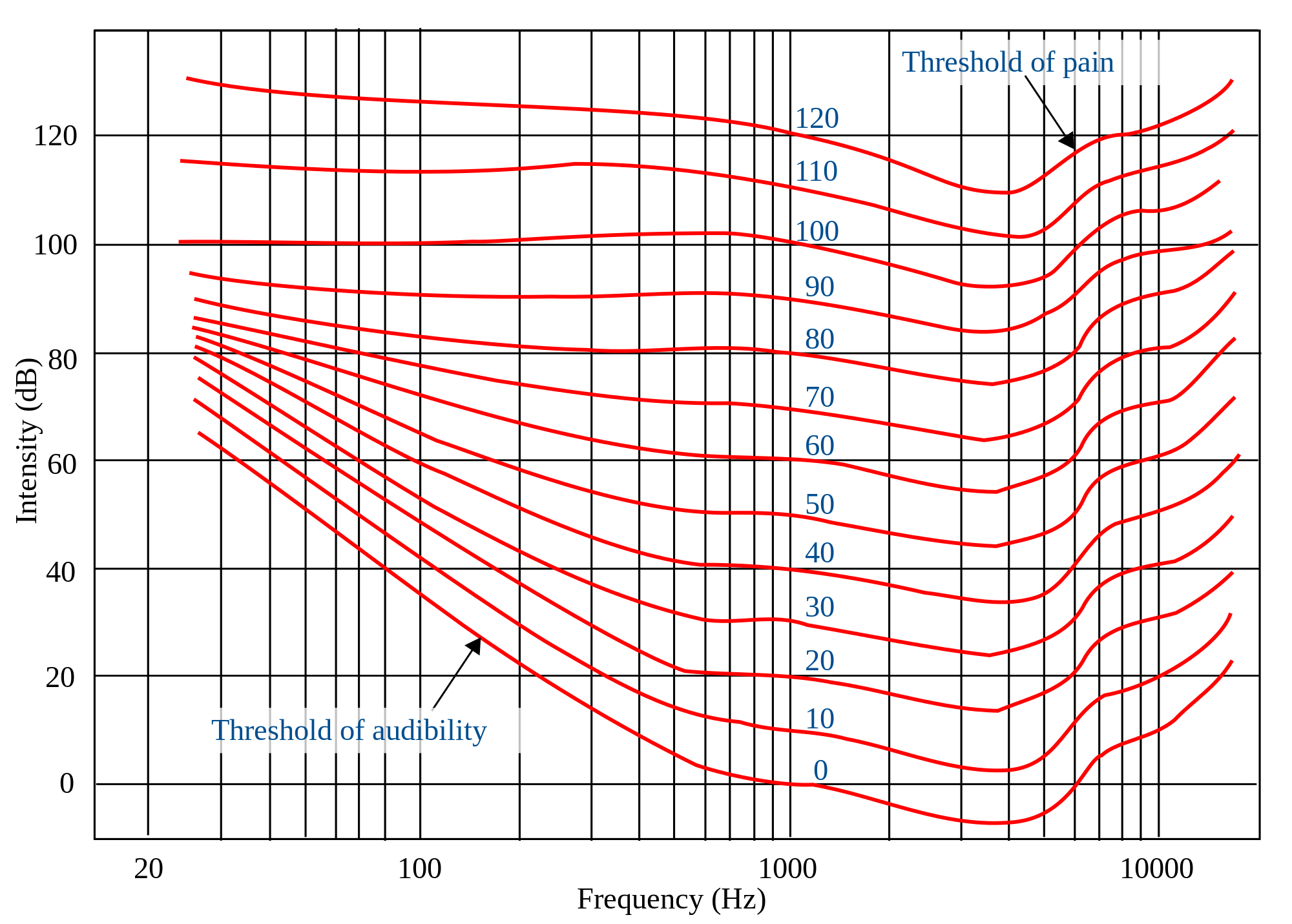

In 1933, two American physicists, Harvey Fletcher and Wilden Munson, published a graph that later became known by their last names: “the Fletcher–Munson curves”.

In this graph, the sound level is on the vertical axis (relative to the threshold of human hearing) and the frequency range is on the horizontal axis. The reference frequency, at which perceived loudness equals the measured level, is 1000 Hz.

These lines tell us the following. For example, let’s take a level of 20 dB SPL. For a low frequency (20 Hz) to sound as loud as 1000 Hz does at that level, the low frequency has to be raised to about 60 dB SPL. That’s huge. The overall trend is as follows: we hear low and high frequencies as quieter than mid frequencies.

That’s important to understand for at least two reasons:

- Subjectively, we perceive louder music as “better”. That is why we have the so-called “loudness war”, in which engineers push the volume as hard as they can at the mastering stage. Objectively the sound gets worse, but subjectively we perceive it as better (yes, that’s the paradox!).

- When we listen to music quietly, the frequency balance changes for our ears: for instance, we hear the bass as quieter than everything else. Professionals suggest writing music and doing a mixdown at an average level of about 80 dB SPL. But please keep in mind that music is one of the few activities that directly affect other people against their will. Respect others and don’t listen to music too loud.

Getting heavy? There is plenty of technical talk below. Take a rest and make a cup of tea.

Waveform

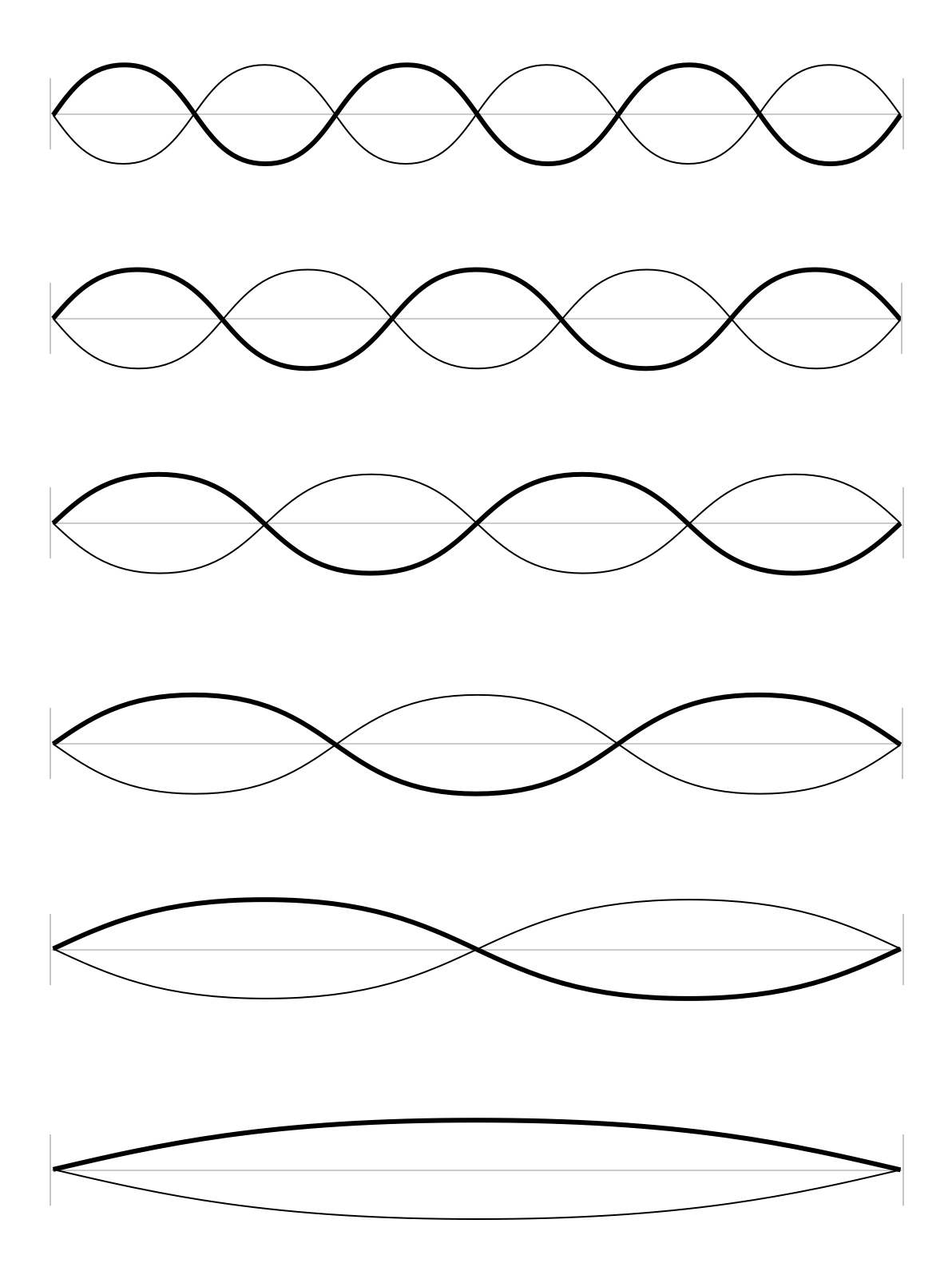

Let’s get back to our imaginary string. Now imagine a more extravagant picture. We attach a pencil to the middle of the string, so it moves up and down along with the string’s vibration, and a roll of paper moves behind the pencil. When you pluck the string, the pencil draws a line. This line is the waveform.

A waveform (or wave shape) is a graphic representation of an object’s vibration, and it contains what is needed to reproduce the sound. It is not some abstract thing, but a specific visual graph of this particular sound.

So the sound we hear depends on the waveform. By hearing we perceive it as timbre.

A sine wave is the simplest wave shape. However, such simple sounds are hard to find around us. Moreover, if all instruments had the same waveform, they would sound the same. Fortunately, the piano, the guitar, the violin and hundreds of other instruments sound different.

In fact, an object doesn’t wiggle in just one way. If we pluck our imaginary string, it vibrates along its whole length, but also in halves, in thirds, in quarters and so on. And the shorter the segment, the faster it vibrates. As a result, we have a series of wiggles, one simple sound on top of another. This series of vibrations is called harmonics.

Harmonics are in a mathematical relation to each other, as in harmony: the frequency of the Nth vibration in the series is N times the frequency of the first one. In other words, harmonics are whole-number multiples of the initial frequency.

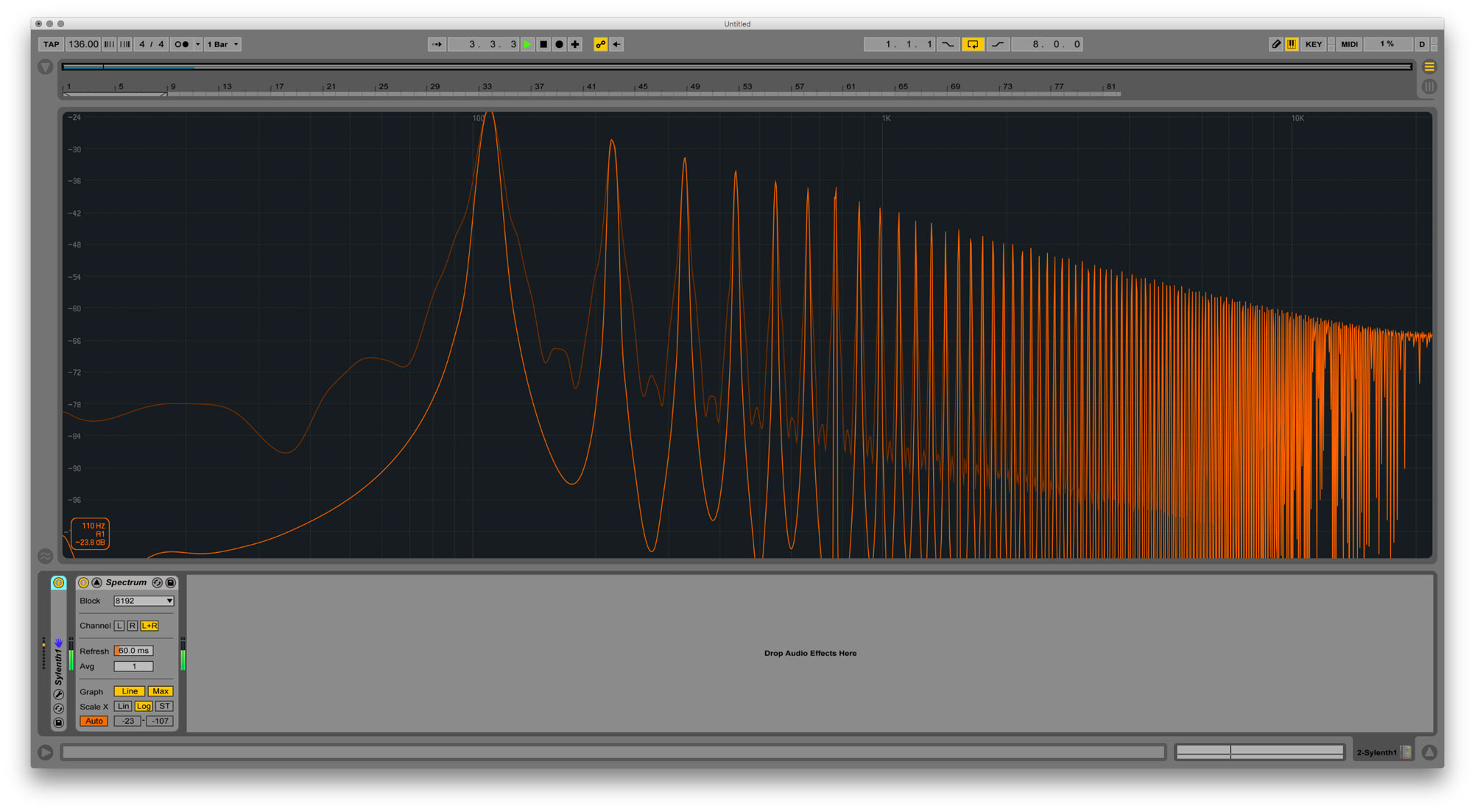

For example, let’s take a sound on the note “A” of the second octave, A2. Its frequency is 110 Hz. The frequency of the second vibration in its harmonics is 220 Hz (2×110), the third is 330 Hz (3×110), the fourth is 440 Hz (4×110), and so on up to the end of the spectrum.

In the example above, 110 Hz is the base of this particular series of harmonics and is called the fundamental frequency. All the wiggles above it are called overtones.
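The A2 example can be reproduced with a short sketch. The function name is hypothetical, and the split into fundamental and overtones simply follows the definitions given in the text.

```python
def harmonic_series(fundamental_hz: float, count: int) -> list[float]:
    """Return the first `count` harmonics: 1x, 2x, 3x... the fundamental."""
    return [n * fundamental_hz for n in range(1, count + 1)]


harmonics = harmonic_series(110.0, 4)
fundamental = harmonics[0]  # the first harmonic is the fundamental
overtones = harmonics[1:]   # everything above it

print(harmonics)    # [110.0, 220.0, 330.0, 440.0]
print(fundamental)  # 110.0
print(overtones)    # [220.0, 330.0, 440.0]
```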

Let’s write it down to avoid confusion:

| Name | Meaning |
| --- | --- |
| Harmonics | A series of wiggles whose frequencies are multiples of the fundamental |
| Fundamental | The lowest frequency of the harmonics |
| Overtone | Any wiggle above the fundamental frequency |
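To show how stacking harmonics changes the waveform, here is a minimal sketch that adds sine waves together. The sample rate, duration, and the overtone amplitudes are arbitrary values picked for illustration, not measurements of any real instrument.

```python
import math


def additive_wave(fundamental_hz: float, amplitudes: list[float],
                  sample_rate: int = 44100, duration_s: float = 0.01) -> list[float]:
    """Sum sine waves at 1x, 2x, 3x... the fundamental, one amplitude each."""
    n_samples = round(sample_rate * duration_s)
    wave = []
    for i in range(n_samples):
        t = i / sample_rate  # time of this sample in seconds
        value = sum(
            amp * math.sin(2.0 * math.pi * (k + 1) * fundamental_hz * t)
            for k, amp in enumerate(amplitudes)
        )
        wave.append(value)
    return wave


# Same pitch (110 Hz), different waveforms: a pure sine versus a richer tone.
pure = additive_wave(110.0, [1.0])
rich = additive_wave(110.0, [1.0, 0.5, 0.33, 0.25])

print(len(pure), len(rich))  # both have the same number of samples
print(pure != rich)          # True: the shapes differ, so the timbre differs
```

Both signals share the same fundamental, so they would be heard at the same pitch; only the shape of the wave changes.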

If vibrations are not in a mathematical relation to each other, that is, not in harmony, we perceive the sound as noise. For instance, the sound of a waterfall.

Recap

- Sound has three physical properties: amplitude, frequency, and waveform. By hearing we perceive them as loudness, pitch, and timbre.

| Property | Meaning | Perception |
| --- | --- | --- |
| Amplitude | The intensity of vibrations | Loudness |
| Frequency | The speed of vibrations | Pitch |
| Waveform | The structure of vibrations | Timbre |

- Loudness is measured in decibels. Decibels show how loud a sound is only relative to something. As standard reference levels, we use two scales: the threshold of human hearing (0 dB SPL, its minimum) and the operating level of digital systems (0 dB FS, its maximum).

- The speed of vibrations is measured in hertz. The frequency range of human hearing is 20 Hz to 20,000 Hz. We divide this range into three groups: lows, mids, and highs. We hear lows and highs as quieter than mids.

- The variety of sounds comes from the sum of simple vibrations on top of each other: the harmonics. Harmonics are made of a fundamental tone and overtones. If the vibrations are not in a mathematical relation to each other, we perceive them as noise.

Cover image: a cymbal vibrating in slow motion. The solid object behaves almost like a liquid, and its vibrations cause the sound. How cool is that?

Great article.

Would love to hear some elaboration on some of the concepts, such as the fact that if sound is basically vibration, and vibration is energy, that makes sound a form of energy.

Our bodies are capable of experiencing this energy in ways other than just hearing. This is why we can ‘feel’ sounds, e.g. bass seems to hit us in the stomach at times. I once saw Leftfield play live, and they pitched a solo kick drum down until it got below the threshold of human hearing, then kept it there, and the crowd kept moving to that beat even though it could no longer be heard.

Also, some questions...

As psytrance and related music is designed mostly to be listened to at very loud volumes on big sound systems, should a mix be calibrated to cater to this? E.g. if you set the level of your bassline so that it sounds correct in proportion to the rest of the mix at low volume, won’t it sound too loud when played on a huge system?

Similarly with frequencies. If you have a kick drum that has a very low frequency ‘thump’, when you play that on a massive sound system with 21" subs, you will feel those frequencies more than hear them, but if you are on headphones or small monitors in the studio you won’t get that same feeling. How do you mix with that in mind?

Yes, that’s correct. Acoustic sound is a mechanical wave, so it can “punch you in the face” literally if you stand close to a big enough PA system.

No, it shouldn’t, but it is something that producers have to keep in mind when making music. Let’s say you make a track at 40 dB SPL, which is really quiet: you won’t be able to hear the bassline properly. So you think, “the track lacks bassline pressure, let’s boost it”. As a result, the bassline will be way too loud at higher volume.

Luckily, there are many tools available to help musicians with that: graphic equalizers and spectrum analyzers. They help you see sound even when you can’t hear it. Look at the pictures here, for example.